The exponential digital social world

Tech-savvy start-ups with natively digital business models regard this point in time as the best time in the history of the world to invent something. The world is buzzing with technology-driven opportunities that build on the solid platform laid down over the past 30 years: first the Internet, then mobility and social, and now the massive scale of cloud computing and the Internet of Things (IoT).

For the start-up community, this is a platform for invention, coupled with lowered or disrupted barriers, access to venture capital, better risk/benefit ratios and higher returns through organisational agility.

Kevin Kelly, co-founder of Wired magazine, believes we are poised to create truly great things and that what’s coming is exponentially different, beyond what we envisage today: ‘Today truly is a wide open frontier. We are all becoming. It is the best time ever in human history to begin’ (June 2016). Throughout history, there have been major economic and societal shifts, and the revolutionary nature of these has only been apparent in retrospect. At the time, the changes were experienced as linear and evolutionary. But now is different. Information access is globalised and is seen as a democratic right for first world citizens and a human right for the less advantaged.

The genesis was the Internet, and the scale is now exponential because cloud-based platforms embed connections between data, people and things into the very fabric of business and daily life. Economies are information and services-based and knowledge is a valued currency. This plays out at a global, regional, community and household level. Proactive leaders of governments, businesses and communities who are addressing these trends stress the need for innovation and transformative (rather than incremental) change to shape future economies and societies over the next few years. In a far-reaching example of transformative vision and action, Japan is undertaking ‘Society 5.0’, a full national transformation strategy including policy, national digitisation projects and deep cultural changes. Society 5.0 sits atop a model of five waves of societal evolution towards a ‘super smart society’. The ultimate state (5.0) is achieved by applying technological advancements to enrich the opportunities, knowledge and quality of life for people of all ages and abilities.

The Society 5.0 collaboration goes further than the digitisation of individual businesses and the economy: it includes all levels of Japanese society, and the transformation of society itself. Society 5.0 is a framework to tackle several macro challenges that are amplified in Japan, such as an ageing population. Today, 26.3% of the Japanese population is over 65; for the rest of the world, 20% of people will be over 60 by 2020. Japan is responding through the digitisation of healthcare systems and solutions, the increased mobility and flexibility of work to keep people engaged in meaningful employment, and the digitisation of social infrastructure across communities and into homes. This journey is paved with important technology-enabled advances, such as

- IoT,

- robotics,

- artificial intelligence,

- virtual and augmented reality,

- big data analytics

- and the integration of cyber and physical systems.

Japan’s transformation approach is about more than embracing digital: it navigates the perfect storm of technology change and profound changes in culture, society and business models. Globally, we are all facing four convergent forces that are shaping the fabric of 21st century life.

- It’s the digital social world – engaging meaningfully with people matters, not merely transacting

- Generational tipping point – millennials now have the numbers as consumers and workers, and their value systems and ways of doing and being are profoundly different

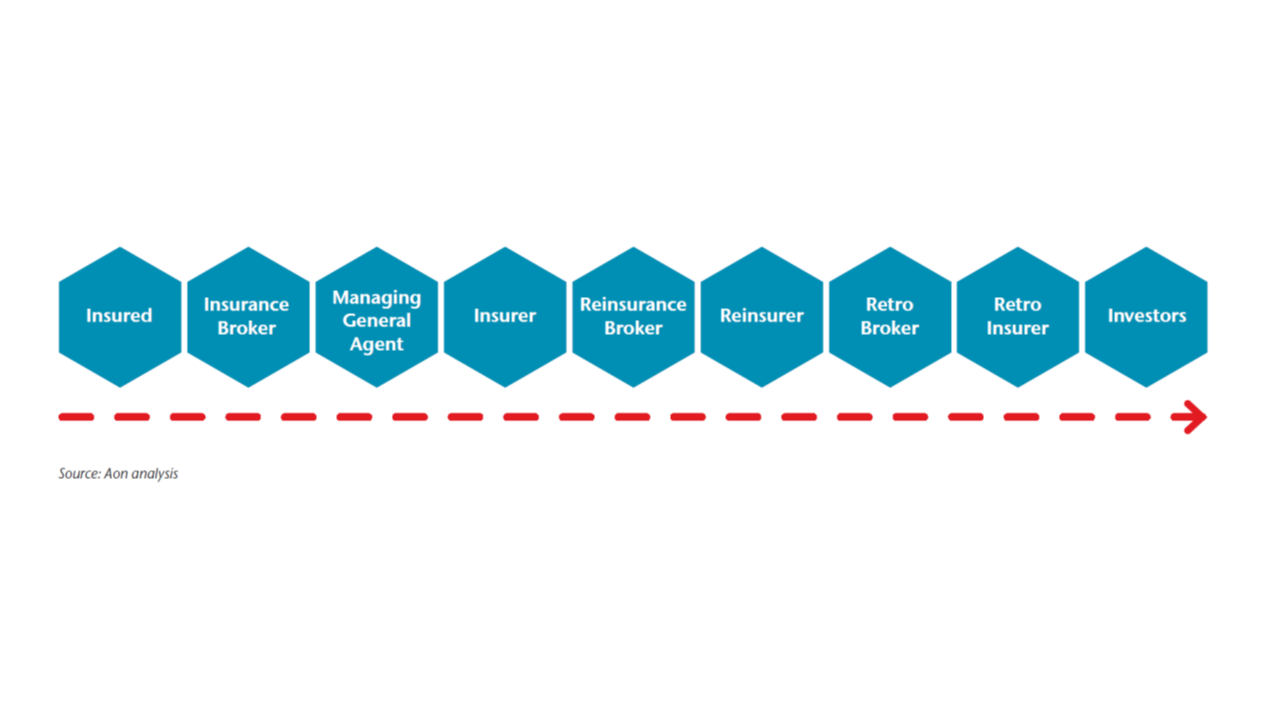

- Business models – your value chain is no longer linear; you are becoming either an ecosystem platform or a player / supplier into that ecosystem

- Digital is ubiquitous – like particles in the atmosphere, digital is all around us, connecting people, data and things – it’s the essence of 21st century endeavours

How do leaders of our iconic, successful industrial era businesses view this landscape? Leaders across organisations, governments and communities are alert to the opportunities and threats from an always-on economy. Not all leaders are confident they have a cohesive strategy and the right resources to execute a transformative plan for success in this new economy of knowledge, digital systems and the associated intangible assets – the digital social era. RocketSpace, a global ecosystem providing a network of campuses for start-up acceleration, estimates that 10 years from now, in 2027, 75% of today’s S&P 500 will have been replaced by digital start-ups (RocketSpace Disruption Brief, March 2017). Even accounting for some potential skew in this estimate, we are in the midst of unprecedented change.

What is change about?

What are the strategic assets and capabilities that an organisation needs to have when bridging from the analogue to the digital world? Key to succeeding in this is taking the culture and business models behind successful start-ups and imbuing them into the mature enterprise. Organisations need to employ outside-in, stakeholder-centric design thinking and adopt leveraged business models that create scaled resources, agility, diversity of ideas and the headspace to explore, experiment, fail and try again.

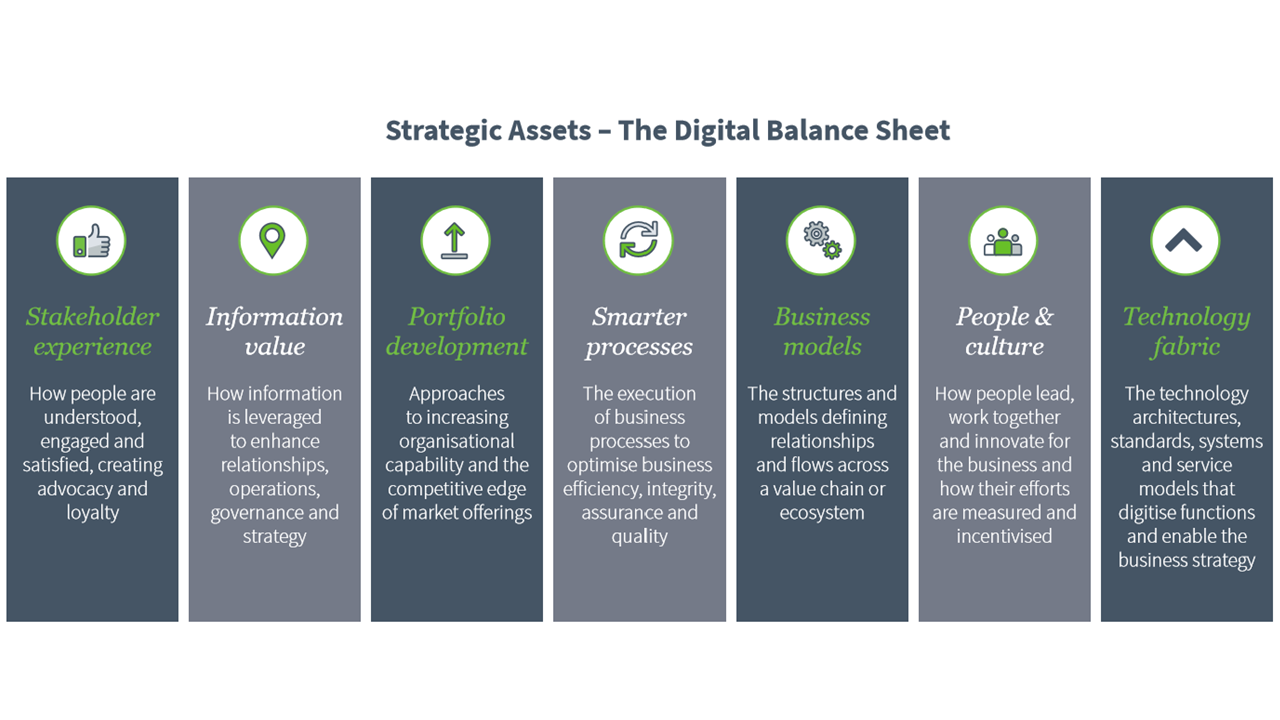

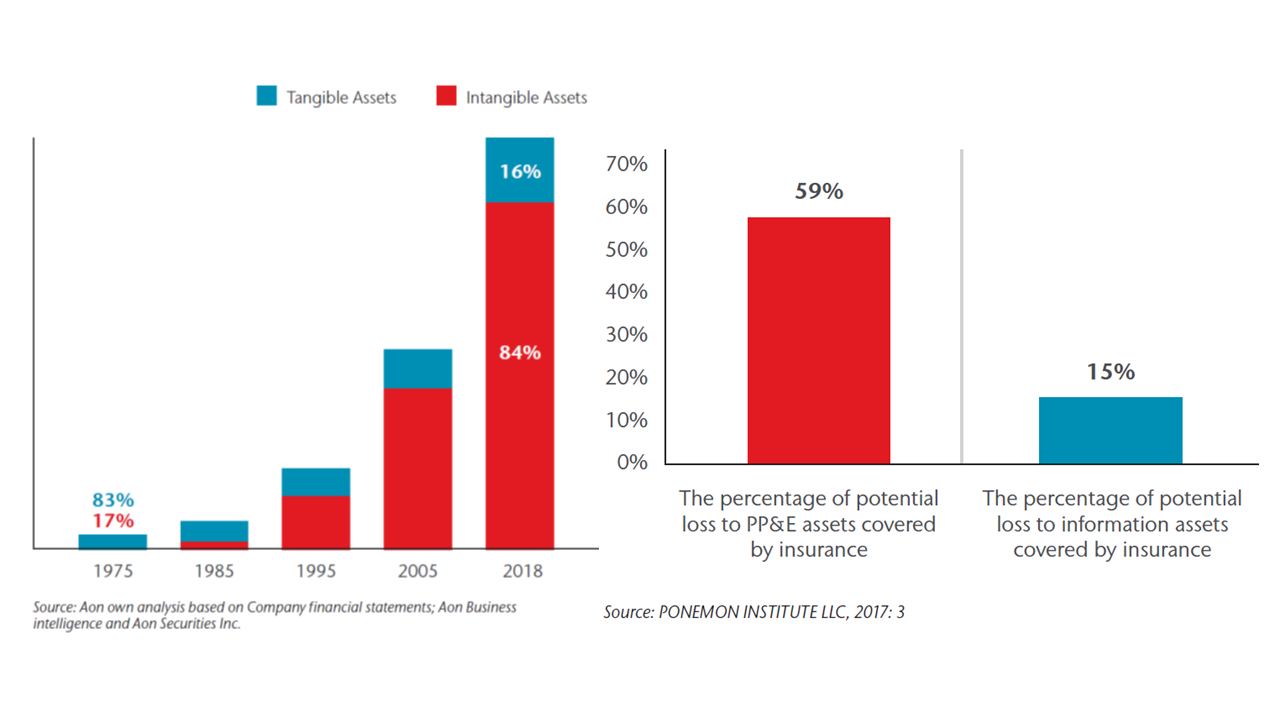

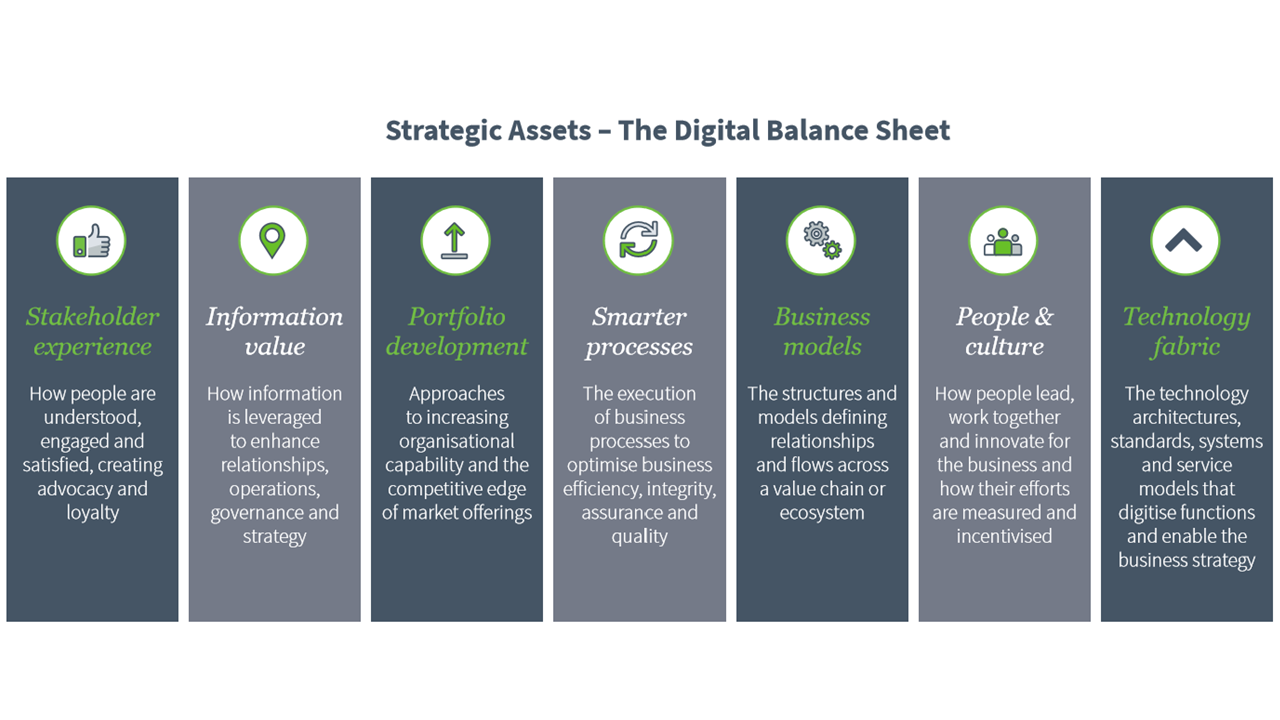

The need to protect existing assets and sources of value creation remains important. However, what drives value is changing, so a revaluation of portfolios is needed against a new balance sheet, the digital social balance sheet.

The Dimension Data Digital Social Balance Sheet evolved from analysing transformational activities with our global clients from the S&P 500, the government, education and public health sectors, and not-for-profits. We also learnt from collaborations with tech start-ups and from the R&D investment activities of our parent company, Nippon Telegraph and Telephone Group (NTT), where they create collaborative ecosystems referred to as B2B2X. The balance sheet represents the seven top-level strategic capabilities driving business value creation in the digital social era. This holds across all industries, though it may be expressed differently and have different relative emphasis for various sectors – for example, stakeholders may include employees, partners, e-lance collaborators, customers, patients, shareholders or a congregation.

Across each capability we have defined five levels of maturity, and this extends the balance sheet into the Dimension Data Digital Enterprise Capability Maturity Model. This is a holistic, globally standardised framework. Using this tool, organisations can

- assess themselves today,

- specify their target state,

- conduct competitive benchmarking,

- and map out a clear pathway of transitions for their business and stakeholders.

The framework can also be applied to construct your digital balance sheet reporting – values and measures can be monitored against organisational objectives.
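As a rough illustration of how such an assessment might be recorded, the sketch below scores each capability from 1 to 5, compares current state with target state, and reports how evenly maturity is spread. It is a minimal sketch, not Dimension Data's tool: the pillar names (`pillar_1` and so on), the `PillarScore` class and the helper functions are hypothetical, since the seven pillars are not named in this text.

```python
from dataclasses import dataclass

# The text defines five levels of maturity per capability.
MIN_LEVEL, MAX_LEVEL = 1, 5


@dataclass(frozen=True)
class PillarScore:
    """Current and target maturity for one capability pillar (hypothetical structure)."""
    pillar: str
    current: int
    target: int

    def __post_init__(self):
        # Reject levels outside the five-level scale.
        for level in (self.current, self.target):
            if not MIN_LEVEL <= level <= MAX_LEVEL:
                raise ValueError(f"{self.pillar}: level {level} is outside 1-5")

    @property
    def gap(self) -> int:
        """Levels still to climb; zero if the target is already met."""
        return max(self.target - self.current, 0)


def gap_report(scores):
    """Return pillars ordered by largest gap first (ties keep input order)."""
    return sorted(scores, key=lambda s: s.gap, reverse=True)


def spread(scores):
    """Difference between the most and least mature pillar today."""
    levels = [s.current for s in scores]
    return max(levels) - min(levels)


# Placeholder pillar names and scores, for illustration only.
assessment = [
    PillarScore("pillar_1", current=2, target=4),
    PillarScore("pillar_2", current=3, target=3),
    PillarScore("pillar_3", current=1, target=4),
]

for score in gap_report(assessment):
    print(f"{score.pillar}: level {score.current} -> {score.target} (gap {score.gap})")
print("spread across pillars:", spread(assessment))
```

A wide spread flags pillars that are out of alignment with the rest, and the ordered gaps give one simple way to prioritise transitions towards the target state.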

Where does your organisation sit? Thinking about your best and worst experiences with a business or government organisation this year, what is revealed about their capabilities? Across each of the pillars of this model, technology is a foundation and an enabler of progressive maturity. For example, effective data architecture and data management platforms underpin the information value capability of responsiveness. A meaningful capability will be enabled by the virtual integration of hybrid data sources (internal systems, external systems, machines, sensors, social) for enhanced perception, discovery, insight and action by both knowledge workers and AI agents. Uber is a leading innovator in this, and is also applying deep learning to predict demand and direct supply, not just in time but just before time. In this, it is exploring beyond today’s proven and mainstream capabilities to generate unique business value.

Below is a high-level assessment of three leading digital organisations at this point in their evolution: Uber, Alibaba and the Estonian government. We infer their capabilities from our research into their organisational journeys and milestones, using published material such as articles and case studies, as well as our personal experiences engaging with their platforms. Note that the capabilities of each of these organisations are roughly in alignment across the seven pillars, which is key to sustainable value creation. For example, an updated online presence aimed at improving user experience delivers limited value unless it is integrated in real time across all channels, with information leveraged to learn and deepen engagement, and with processes designed around user context that can adapt to fulfil the need at that point in time.

Innovation horizons

In the model below, key technology trends are shown. We have set out a view of their progression to exponential breakthrough (x axis) and the points at which these technologies will reach the peak of the adoption curve, flipping from early to late adopters (y axis). Relating this to the Digital Enterprise Capability Maturity Model, level 1 and 2 capabilities derive from what are now mature foundations (past). Level 3 aligns with what is different and has already achieved the exponential breakthrough point. Progressing to level 4 requires a preparedness to innovate and experiment with what is different and beyond. Level 5 entails an appetite to be a first mover, experimenting with technologies that will not be commercial for five to ten years but that potentially provide significant first mover advantage. This is where the horizons of innovators such as Elon Musk are set, with Tesla and SpaceX.

An example of all of this coming together at level 3 of digital capability maturity and the different horizon (involving cloud, mobility, big data, analytics, IoT and cybersecurity) to enable a business to transform is Amaury Sport Organisation (A.S.O.) and its running of the Tour de France. The Tour was conceived in 1903 as an event to promote and sell A.S.O.’s publications and is today the most watched annual sporting event in the world. Spectators, athletes and coaches are hungry for details and insights into the race and the athletes. Starting from the 2015 Tour, A.S.O. has leapt forward as a digital business. Data collected from sensors attached to each cyclist’s bike is aggregated on a secure, cloud-based big data platform, analysed in real time and turned into entertaining insights and valuable performance statistics for followers and stakeholders of the Tour. This has opened up new avenues of monetisation for A.S.O. Dimension Data is the technology services partner enabling this IoT-based business platform.

If your organisation is not yet on the technology transformation path, consider starting now. For business to prosper from the digital economy, you must be platformed to enable success – ready and capable to seamlessly connect humans, machines and data and to assure secure ecosystem flows. The settings of our homes, cars, schools and learning institutions, health and fitness establishments, offices, cities, retail outlets, factories, defence forces, emergency services, logistics providers and other services are all becoming forever different in this digital atmosphere.

Where is your innovation horizon set? The majority of our co-innovation agendas with our clients are focused on the beyond horizons. In relation to this, we see four pairs of interlinked technologies being most impactful:

- artificial intelligence and robotics,

- virtual/augmented reality and the human machine interface,

- nano-technology and 3D/4D printing,

- and cybersecurity and the blockchain.

Artificial intelligence and robotics

Artificial intelligence (AI) is both a science and a set of technologies inspired by the way humans sense, perceive, learn, reason, and act.

We are rapidly consuming AI and embedding it into our daily living, taking it for granted. Think about how we rely upon GPS and location services, use Google for knowledge, expect Facebook to identify and tag faces, ask Amazon to recommend a good read and Spotify to generate a personalised music list. Not so long ago, these technologies were awe-inspiring.

Now, and into the next 15 years, there is an AI revolution underway, a constellation of different technologies coming together to propel AI forward as a central force in society. Our relationships with machines will become more nuanced and personalised. There’s a lot to contemplate here. We really are at a juncture where discussion is needed at all levels about the ways that we will and won’t deploy AI to promote democracy and prosperity and equitably share the wealth created from it.

The areas in which this will have the fastest impact are transportation, traditional employment and workplaces, the home, healthcare, education, public safety and security and entertainment. Let’s look at examples from some of these settings:

Transportation – Autonomous vehicles encapsulate IoT, all forms of machine learning, computer vision and also robotics. This will soon break through the exponential point, once the physical hardware systems are robust enough.

Healthcare – there is significant potential for the use of AI in pure and applied research and healthcare service delivery, as well as in aged and disability related services. The collection of data from clinical equipment (e.g. MRI scanners and surgical robots), clinical electronic health records, facility-based room sensors, personal monitoring devices and mobile apps is allowing more complete digital health records to be compiled. Analysis of these records will evolve clinical understandings. For example, NTT Data provides a Unified Clinical Archive Service for radiologists, providing machine learning interpretation of MRI brain imagery. The service provides digital translations of MRI brain scans and contains complete data sets of normal brain functions (gathered from Johns Hopkins University in the US). Radiologists are able to quantitatively evaluate their patient results against the normal population to improve diagnostics. Each new dataset adds to the ecosystem of knowledge.

Education – AI promises to enhance education at all levels, particularly in providing personalisation at scale for all learners. Interactive machine tutors are now being matched to students. Learning analytics can detect how a student is feeling, how they will perform and which interventions are most likely to improve learning outcomes. Online learning has also enabled great teachers to extend their classes to worldwide audiences, while at the same time students’ individual learning needs can be supported through analysis of their responses to the global mentor. Postgraduate and professional learning is set to become more modular and flexible, with AI used to assess current skills and work related projects and match learning modules of most immediate career value – an assemble your own degree approach. Virtual reality, along with AI, is also changing learning content and pathways to mastery, and so will be highly impactful. AI will never replace good teaching, and so the meaningful integration of AI with face-to-face teaching will be key.

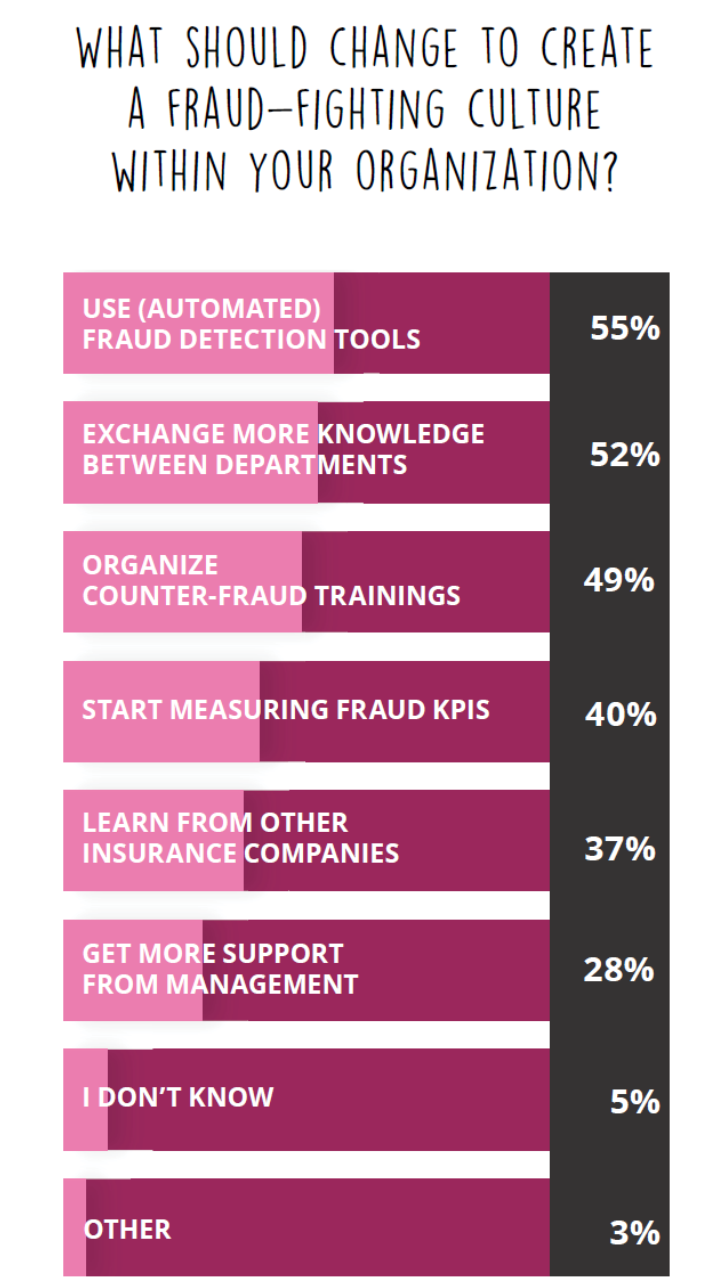

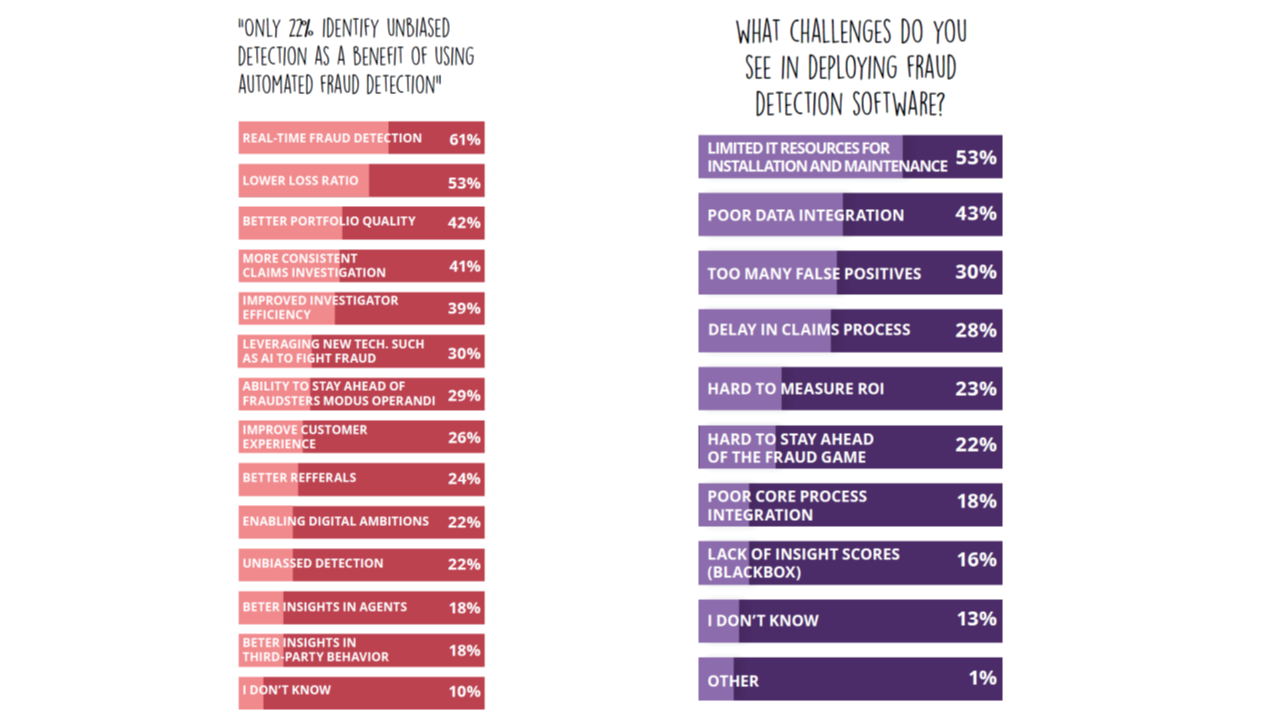

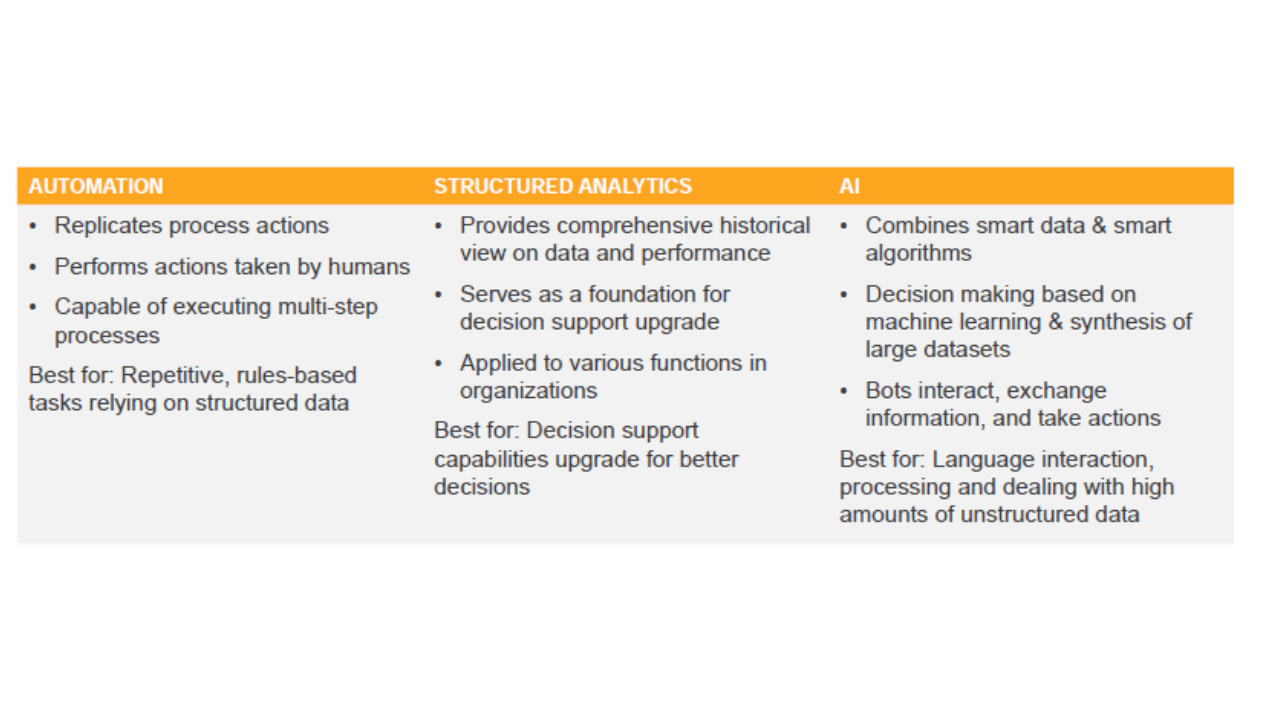

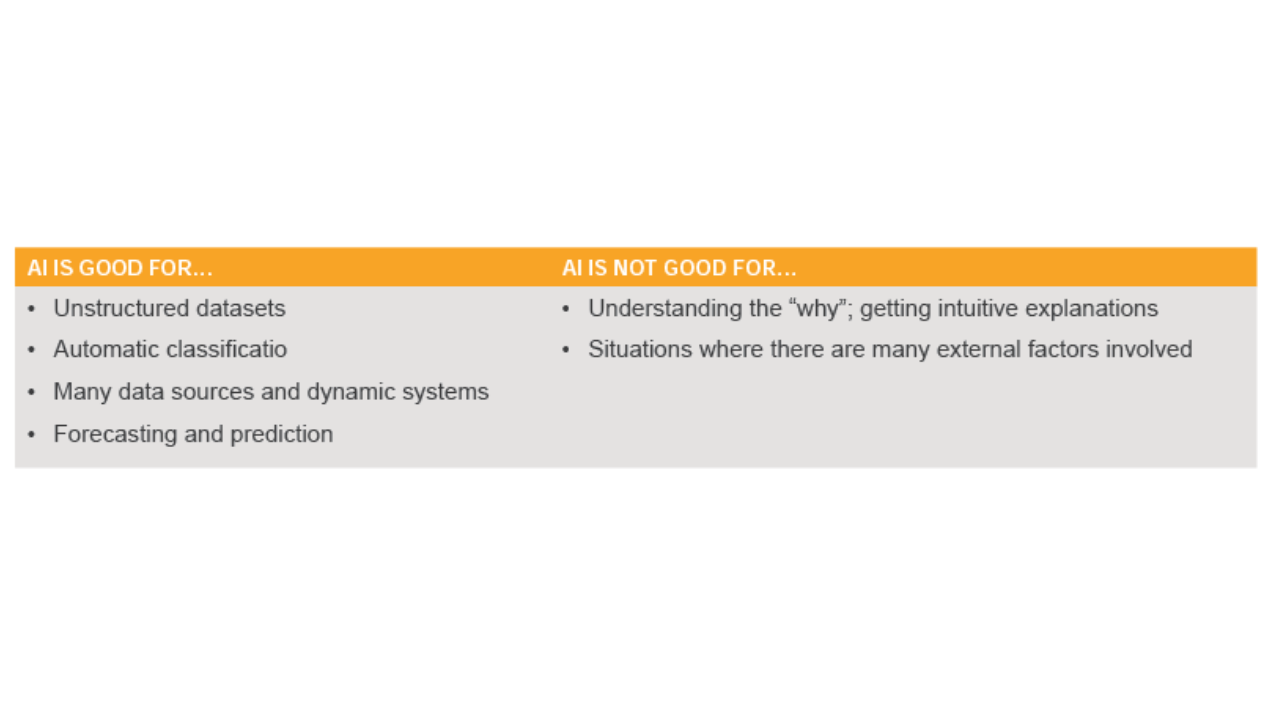

Public safety and security – Cybersecurity is a key area for applied AI, as is machine learning applied to the datasets from ubiquitously placed surveillance cameras and drones. In tax, financial services, insurance and international policing, algorithms are improving the conduct of fraud investigations. A significant driver for advances in deep learning, particularly in video and audio processing, has come off the back of anti-terrorist analytics. All of these things are now coming together in emergency response planning and orchestration and in the emerging field of predictive policing.

Virtual reality/augmented reality and the human machine interface

The lines between the physical and digital worlds are blurring along the ‘virtuality’ continuum of augmented and virtual reality. Augmented reality (AR) technologies overlay digital information on the ‘real world’, delivered via a mechanism such as a heads-up display, smart glass wall or wrist display. Virtual reality (VR) immerses a person in an artificial environment where they interact with data, with their visual senses (and others) controlled by the VR system. Augmented virtuality blends AR and VR. As virtuality becomes part of our daily lives, the ways we interact with each other, learn, work and transact are being reshaped.

At the 2017 NTT R&D Fair in Tokyo, the use of VR in sports coaching and the spectator experience was showcased, with participants able to experience playing against elite tennis and baseball players and riding in the Tour de France. A VR spectator experience also enabled direct experience of the rider’s view and the sensation of the rider’s heart rate and fatigue levels. These applications of VR and AI are being rapidly incorporated into sports analytics and coaching.

Other enterprise VR use cases include

- teaching peacekeeping skills to troops in conflict zones,

- the creation of travel adventures,

- immersion in snowy climate terrain to reduce pain for burn victims,

- teaching autistic teenagers to drive,

- and 3D visualisations of organs prior to conducting surgery.

It isn’t hard to imagine the impact on educational and therapeutic services, government service delivery, a shopping experience, on social and cultural immersion for remote communities and on future business process design and product engineering.

Your transformation journey

Every business is becoming a digital business. Some businesses are being caught off guard by the pace and nature of change. They are finding themselves reactive, pulled into the digital social world by the forces of disruption and the new rules of engagement set by clients, consumers, partners, workers and competitors. Getting on the front foot is important in order to control your destiny and assure future success. The disruptive forces upon us present opportunities to create a new future and value for your organisation and stakeholders. There are also risks, but the risk management approach of doing nothing is not viable in these times.

Perhaps your boardroom and executive discussions need to step back from thinking about the evolution of the current business and think in an unconstrained ‘art of the possible’ manner about the impact of global digital disruption and the sources of value creation into the future. What opportunities, threats and risks do these present? What is in the best interests of the shareholders? How will you retain and improve your sector competitiveness and use digital to diversify?

Is a new industry play now possible? Is your transformed digital business creating the ecosystem (acting as a platform business) or operating within another? How will it drive the business outcomes and value you expect and some that you haven’t envisaged at this point?

The digital balance sheet and seven pillars of digital enterprise capability could be used as the paving blocks for your pathway from analogue to digital. The framework can also guide and measure your progressive journey.

Our experiences with our clients globally show us that the transformation journey is most effective when executed across three horizons of change. Effective three-step horizon planning follows a pattern for course charting, with a general flow of:

- Establish – laying out the digital fabric to create the core building blocks for the business and executing the must do/no regret changes that will uplift and even out capability maturity to a minimum of level 2.

- Extend – creating an agile, cross-functional and collaborative capability across the business and executing a range of innovation experiments that create options, in parallel with the key transformative moves.

- Enhance – embedding the digital social balance sheet into ‘business as usual’, and particularly imbuing innovation to continuously monitor, renew and grow the organisation’s assets.

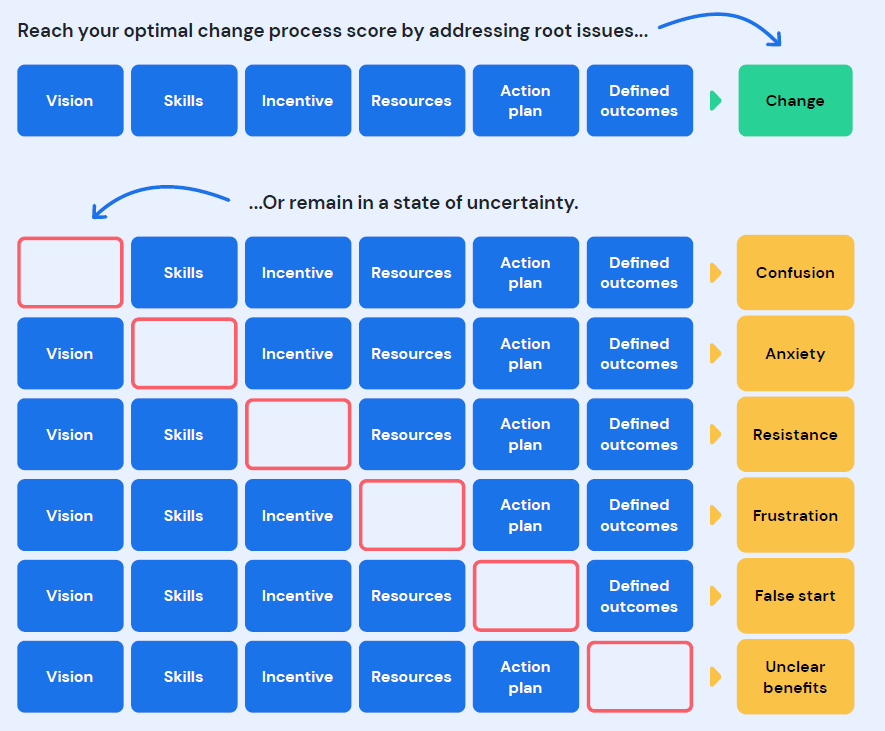

In this, there are complexities and nuances of the change, including:

- Re-balancing of the risk vs opportunity appetite from the board

- Acceptable ROI models

- The ability of the organisation to absorb change

- Dependencies across and within the balance sheet pillars

- Maintaining transitional balance across the pillars

- Managing finite resources – achieving operational cost savings to enable the innovation investment required to achieve the target state

The horizon plans also need to have flex – so that pace and fidelity can be dialled up or down to respond to ongoing disruption and the dynamic operational context of your organisation.

Don’t turn away from analogue wisdom; it is an advantage. Born-digital enterprises don’t have established physical channels and presence, have not experienced economic cycles and lack longitudinal wisdom. By valuing analogue experience and also embracing the essence of outside-in thinking and the new digital social business models, the executive can confidently execute.

A key learning is that the journey is also the destination. Mobilising cross functional teams that draw on diverse skills and perspectives, and that are empowered to act using quality information that is meaningful to them, uplifts your organisational capabilities and in itself will become one of your most valuable assets.
