Data processing has historically been at the very core of the business of insurance undertakings, which is rooted strongly in data-led statistical analysis. Data has always been collected and processed to inform underwriting decisions, price policies, settle claims and prevent fraud.

Insurers have long pursued more granular datasets and predictive models, so the relevance of Big Data Analytics (BDA) for the sector comes as no surprise.

In view of this, and as a follow-up to the Joint Committee of the European Supervisory Authorities (ESAs) cross-sectorial report on the use of Big Data by financial institutions,1 the European Insurance and Occupational Pensions Authority (EIOPA) decided to launch a thematic review on the use of BDA specifically by insurance firms. The aim was to gather further empirical evidence on the benefits and risks arising from BDA. To keep the exercise proportionate, the focus was limited to the motor and health insurance lines of business. The thematic review was officially launched during the summer of 2018.

A total of 222 insurance undertakings and intermediaries from 28 jurisdictions participated in the thematic review. The input collected from insurance undertakings represents approximately 60% of the total gross written premiums (GWP) of the motor and health insurance lines of business in the respective national markets, and it includes input from both incumbents and start-ups. In addition, EIOPA collected input from its Members and Observers, i.e. national competent authorities (NCAs) from the European Economic Area, and from two consumer associations.

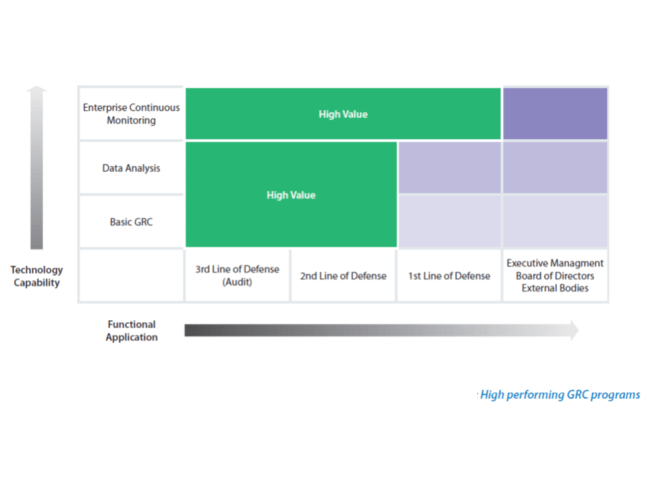

The thematic review has revealed a strong trend towards increasingly data-driven business models throughout the insurance value chain in motor and health insurance:

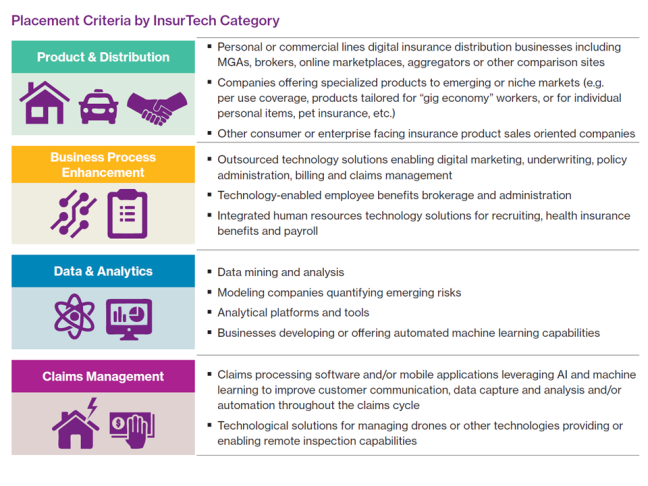

- Traditional data sources such as demographic data or exposure data are increasingly combined (not replaced) with new sources like online media data or telematics data, providing greater granularity and frequency of information about consumers’ characteristics, behaviour and lifestyles. This enables the development of increasingly tailored products and services and more accurate risk assessments.

- The use of data outsourced from third-party data vendors, together with the algorithms those vendors use to calculate credit scores, driving scores, claims scores, etc., is relatively widespread, and this information can be used in technical models.

- BDA enables the development of new rating factors, leading to smaller risk pools and a larger number of them. Most rating factors have a causal link while others are perceived as being a proxy for other risk factors or wealth / price elasticity of demand.

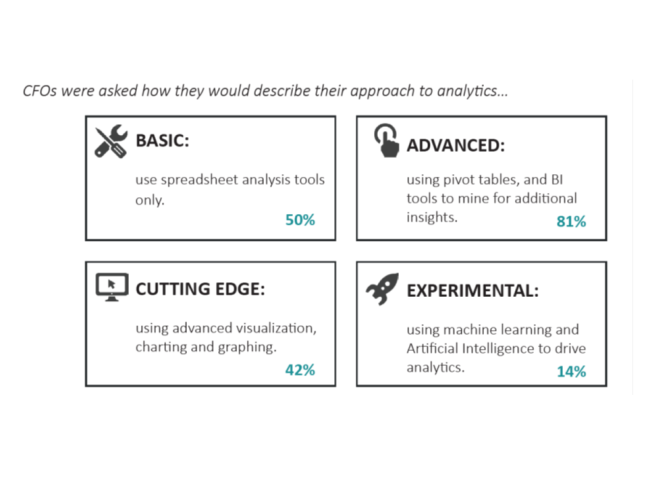

- BDA tools such as artificial intelligence (AI) or machine learning (ML) are already actively used by 31% of firms, and another 24% are at a proof-of-concept stage. Models based on these tools are often correlational rather than causal, and they are primarily used in pricing and underwriting and in claims management.

- Cloud computing services, which reportedly represent a key enabler of agility and data analytics, are already used by 33% of insurance firms, with a further 32% saying they will be moving to the cloud over the next 3 years. Data security and consumer protection are key concerns of this outsourcing activity.

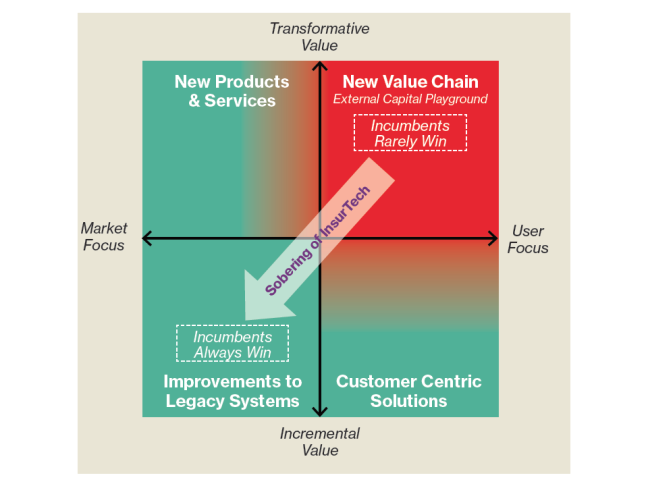

- Uptake of usage-based insurance products will continue gradually in the coming years, influenced by developments such as increasingly connected cars, health wearable devices or the introduction of 5G mobile technology. Robo-advisors and especially chatbots are also gaining momentum within consumer product and service journeys.

- There is no evidence as yet that an increasing granularity of risk assessments is causing exclusion issues for high-risk consumers, although firms expect the impact of BDA to increase in the years to come.

In view of the evidence gathered from the different stakeholders, EIOPA considers that there are many opportunities arising from BDA, both for the insurance industry and for consumers. However, although insurance firms generally already have in place or are developing sound data governance arrangements, there are also risks arising from BDA that need to be further addressed in practice. Some of these risks are not new, but their significance is amplified in the context of BDA. This is particularly the case regarding ethical issues with the fairness of the use of BDA, as well as regarding the accuracy, transparency, auditability and explainability of certain BDA tools such as AI and ML.

Going forward, in 2019 EIOPA’s InsurTech Task Force will conduct further work in these two key areas in collaboration with the industry, academia, consumer associations and other relevant stakeholders. The work being developed by the Joint Committee of the ESAs on AI as well as in other international fora will also be taken into account. EIOPA will also explore third-party data vendor issues, including transparency in the use of rating factors in the context of the EU-US insurance dialogue. Furthermore, EIOPA will develop guidelines on the use of cloud computing by insurance firms and will start a new workstream assessing new business models and ecosystems arising from InsurTech. EIOPA will also continue its on-going work in the area of cyber insurance and cyber security risks.