We identify four major planning trends revealed in the data.

- Trend #1: Aggressively growing companies plan more, plan better, and prioritize planning throughout the organization.

- Trend #2: Successful companies use enterprise-scale planning solutions.

- Trend #3: The right decisions combine people, processes, and technology.

- Trend #4: Advanced analytics yield the insights for competitive advantage.

TREND 01: Aggressively growing companies prioritize planning throughout the organization

Why do aggressively growing companies value planning so highly? To sustain an aggressive rate of growth, companies need to do two things:

- Stay aggressively attuned to changes in the market, so they can accurately anticipate future trends

- Keep employees across the company aligned on business objectives

This is why aggressively growing companies see planning as critical to realizing business goals.

Putting plans into action

Aggressively growing companies don’t see planning as an abstract idea. They put it into practice, planning more often and more efficiently than other companies, and they are much quicker to incorporate market data into their plans.

This emphasis on efficiency, speed, and agility produces real results. Compared to other companies, aggressively growing companies put more of their plans into action. Nearly half of aggressively growing companies turn more than three-quarters of their plans into reality.

For companies that experience a significant gap between planning and execution, here are three ways to begin to close it:

- Increase the frequency of your planning. By planning more often, you gain flexibility, incorporate market data more quickly, and have more time to adjust plans. A less frequent planning cadence, in contrast, leaves your organization executing plans that may lag months behind the market.

- Plan across the enterprise. Execution can go awry when plans made in one area of the business don’t take into account activities in another area. This disconnect can produce unreachable goals throughout the business, which can dramatically reduce the percentage of a plan that gets executed. To avoid this, create a culture of planning across the enterprise, ensuring that plans include relevant data from all business units.

- Leverage the best technology. As the statistic above shows, the companies that best execute on their plans are those that leverage cloud-based enterprise technology. Such technology lets companies plan with all relevant data and involve all necessary stakeholders, so plans are set up for execution from the moment they are made.

TREND 02: Successful companies use enterprise-scale planning solutions

Although the idea that planning benefits every aspect of a business may seem like common sense, the survey data suggests that taking it seriously can help companies come out ahead.

Executives across industries and geographies agreed that planning benefits every business outcome, including:

- enhancing revenues

- managing costs

- optimizing resources

- aligning priorities across the organization

- making strategies actionable

- anticipating market opportunities

- responding to market changes

In fact, 92 percent of businesses believe that better planning technology would provide better business outcomes for their company. Yet planning by itself is not a panacea.

Planning does not always mean good planning. What prepares a company for the future isn’t the simple act of planning; it’s the less simple act of planning well. In business planning, band-aids aren’t solutions.

What counts as good planning? As businesses know, planning is a complicated exercise involving multiple processes, many different people, and data from across the organization. Doing planning right therefore requires a wide-angle view: planners must be able to see past their individual functions and understand how changes in one part of the organization affect the organization as a whole.

The survey results suggest that the best way to give planners this enterprise-level perspective is to use the right technology. Companies whose technology can incorporate data from the entire enterprise are more successful. By contrast, companies whose planning technology cannot link multiple areas of the organization or remove multiple obstacles to planning plan less successfully.

Here are three areas of consideration that can help you begin your Connected Planning journey.

- Get the right tools. Uncertainty and volatility continue to grow, and spreadsheets and point solutions lack the agility to pivot or to accommodate the volumes of data needed to spot risks and opportunities. Consider tools such as cloud-based, collaborative Connected Planning platforms that use in-memory technology and run real-time modeling on large volumes of data. Teams can work together, and the resulting plans are easier to embrace and achieve.

- Operate from a single platform with reliable data. Traditionally, companies have used individual applications to plan for each business function. These solutions are usually disconnected from one another, which makes data unreliable and cross-functional collaboration nearly impossible. A shared platform that brings plans together on shared data reduces, or even eliminates, the process inefficiencies and common errors that can lead to bad decision-making.

- Transform planning into a continuous, connected process. Sales, supply chain, marketing, and finance fulfill different purposes within the business, but they are inextricably linked and rely on each other for success. The ability to connect different business units through shared technology, data, and processes is at the core of a continuous and connected business planning process.

TREND 03: The right decisions combine people, processes, and technology

As businesses examine different ways to drive faster, more effective decision-making, planning plays a critical role in meeting this goal. Ninety-nine percent of businesses say that planning is important to managing costs. According to 97 percent of all survey respondents, planning is crucial for enhancing revenues, optimizing resource allocation, and converting strategies into actions. Eighty-two percent of executives consider planning to be “critically important” for enhancing revenues.

For planning to be successful across an organization, it needs to extend beyond one or two siloed business units. The survey makes this clear: 96 percent of businesses state that planning is important for aligning priorities across the organization. Yet even though companies recognize planning as a critical business activity, major inefficiencies exist: 97 percent of respondents say that their planning can be improved.

The more planners, the merrier the planning

When asked what they could improve in their planning, a majority of respondents named four components as essential:

- Having the right processes

- Involving the right people

- Having the right data

- Having the right technology

To support strong and effective change management initiatives, successful businesses can build a Center of Excellence (COE). A COE is an internal knowledge-sharing community that brings domain expertise in creating, maturing, and sustaining high-performing business disciplines. It consists of an in-house team of subject matter experts who train others and share best practices throughout the organization.

By designing a Center of Excellence framework, businesses can gain more control over the quality, speed, and value of their planning processes, especially as they expand Connected Planning technology into more complex use cases across the company.

Here are six primary benefits that a COE can provide:

- Maintaining quality and control of the planning platform as use cases expand.

- Establishing consistency so that best practices and business data remain reliable.

- Fostering a knowledge-sharing environment to cultivate and develop internal expertise.

- Enabling up- and downstream visibility within a single, shared tool.

- Driving efficiency in developing, releasing, and maintaining planning models.

- Upholding centralized governance and communicating progress, updates, and value to executive sponsors.

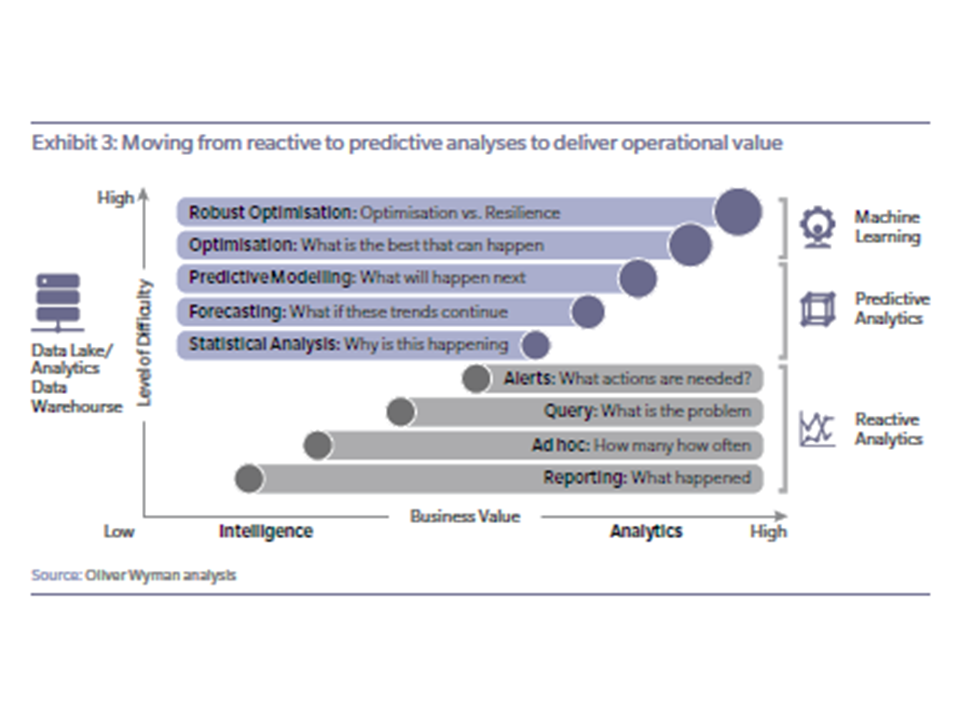

TREND 04: Advanced analytics yield the insights for competitive advantage

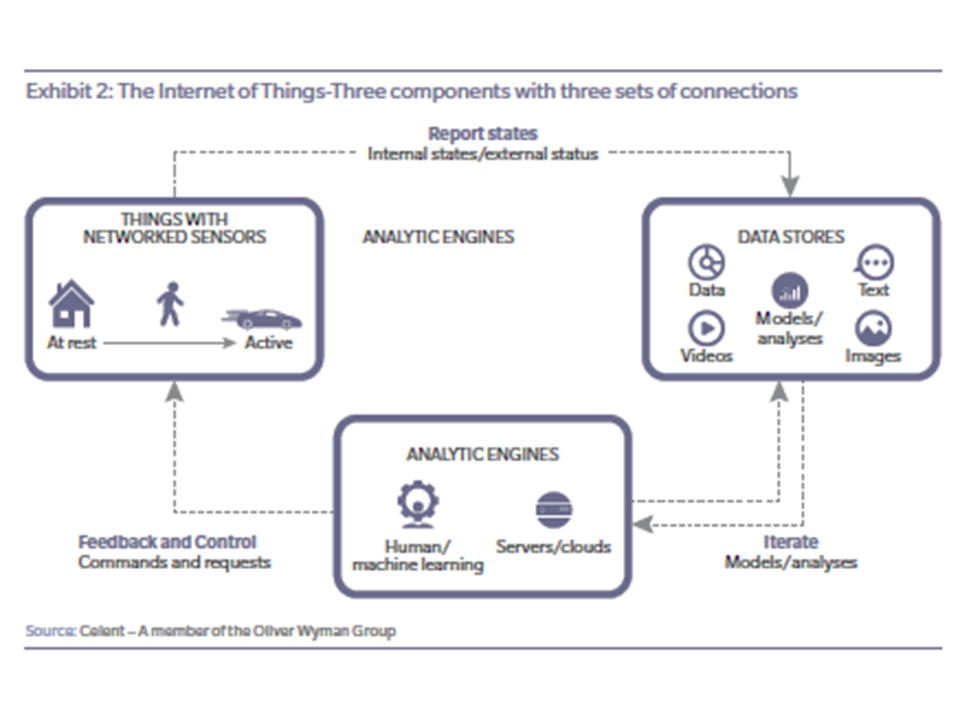

Disruption is no longer disruptive for businesses: it’s an expectation. Widespread globalization, fluid economies, emerging technologies, and fluctuating consumer demands make unexpected events and evolving business models the normal course of business today.

This emphasizes the critical need for a more proactive, agile, and responsive state of planning. As the data shows, companies that have implemented a more nimble approach to planning are more successful.

Planners don’t have to look far to find better insights. Companies that plan monthly or more often are more likely to incorporate new market data into their plans quickly, updating forecasts and plans, assessing the impacts of changes, and keeping a closer eye on ongoing business performance and targets.

However, not all companies are able to plan so continuously: Almost half of respondents indicate that it takes them weeks or longer to update plans with market changes. For businesses that operate in rapidly changing and competitive markets, this lag in planning can be a significant disadvantage.

Advancements in technology can alleviate this challenge. Ninety-two percent of businesses state that improved planning technology would provide better business outcomes for their company. The C-suite in particular is even more optimistic about adopting improved technology: more than half of executives say that better planning technology would result in “dramatically better” business performance.

Planning goes digital

Rather than hunting for data that simply validates a gut-feeling approach to planning, planners now let data sit behind the wheel, the survey results indicate: data informs, develops, improves, and measures plans.

Organizations, as well as a majority of executives, describe digital transformation as a top priority. Over half of all organizations and 61 percent of executives say that digital transformation amplifies the importance of planning. As businesses move into the future, the increasing use of advanced analytics, which ranges from predictive analytics to machine learning and artificial intelligence, will determine which businesses come out ahead.

Roadblocks to data-driven planning

Increasing uncertainty and market volatility make it imperative that businesses operate with agile planning that can be adjusted quickly and effectively. However, as planning response times inch closer to real time, nearly a third of organizations continue to cite two main roadblocks to implementing a more data-driven approach: inaccurate planning data and insufficient technology.

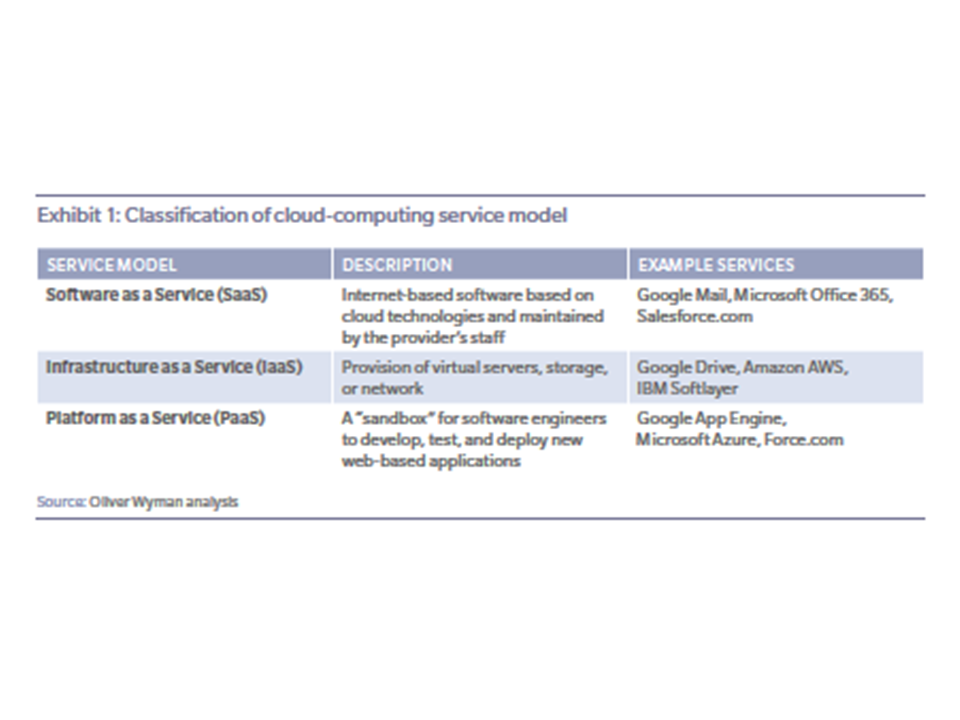

Inaccurate data plagues businesses in all industries. For example, 63 percent of organizations that use departmental or point solutions, and 59 percent of businesses that use on-premises solutions, identify “having the right data” as a key area for improvement in planning. Point solutions in particular can keep data siloed. When data is stored in disparate systems across the organization, planners end up spending more time consolidating systems and information, which can compromise data integrity.

These reasons may be why 46 percent of organizations using point and on-premises solutions say that better technologies are necessary to accommodate current market conditions. In addition, 43 percent of executives say that a move to cloud-based technology would benefit existing planning.

In both cases, data-driven planning remains difficult, as businesses not employing cloud-based enterprise technology struggle with poor data accuracy. By moving to cloud-based technology, businesses can automate and streamline tedious processes, which reduces human error, improves productivity, and gives stakeholders greater visibility into performance.

State-of-planning research reveals that organizations view multiple business planning obstacles as equally problematic, indicating a need for solutions with stronger analytics that can eliminate multiple challenges at once. Nearly half of all respondents expressed a strong desire for a collaborative platform that all functions and departments can use.

Advanced analytical capabilities in planning solutions further support the evolving needs of today’s businesses. In sales forecasting, machine learning methodologies can quickly analyze past pipeline data to make accurate forecast recommendations. In financial planning, machine learning can help businesses analyze weather, social media, and historical sales data to quickly discern how these factors affect sales.

Here are some additional benefits that machine learning methodologies in a collaborative planning platform can offer businesses:

- Better management of changes to existing plans and faster responses to periods of uncertainty, through accurate demand forecasting and demand sensing

- Enlightened operations, real-time forecasting, and smart sourcing and resourcing plans

- Operations that maintain higher productivity and more control with lower maintenance costs

- Targeted customer experience programs that increase loyalty and improve customer engagement

- Products and services that are offered at the right price with effective trade promotions, resulting in higher conversions

Click here to access Anaplan’s detailed White Paper