Customer Experience (CX) is a catchy business term that has been used for decades, but until recently, measuring and managing it was not possible. Now, with the evolution of technology, a company can build and operationalize a true CX program.

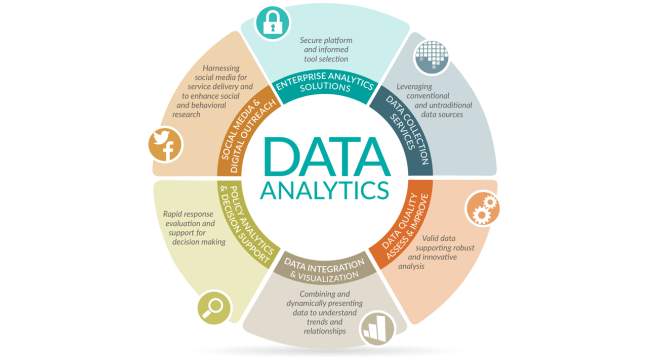

For years, companies championed NPS surveys, CSAT scores, web feedback, and other sources of data as the drivers of “Customer Experience.” However, these singular sources of data don’t give a true, comprehensive view of how customers feel, think, and act. Unfortunately, most companies aren’t capitalizing on the benefits of a CX program. Fewer than 10% of companies have a CX executive, and of those companies, only 14% believe Customer Experience, as a program, is the aggregation and analysis of all customer interactions with the objective of uncovering and disseminating insights across the company in order to improve the experience. At a time when the customer experience separates the winners from the losers, CX must be more of a priority for ALL businesses.

That aggregation includes not only the typical channels through which customers interact with your company directly (calls, chats, emails, feedback, surveys, etc.) but also the channels in which customers may not be interacting directly with you: social, reviews, blogs, comment boards, media, etc.

In order to understand the purpose of a CX team and how it operates, you first need to understand how most businesses organize, manage, and carry out their customer experiences today.

Essentially, a company’s customer experience is owned and managed by a handful of teams. This includes, but is not limited to:

- digital,

- brand,

- strategy,

- UX,

- retail,

- design,

- pricing,

- membership,

- logistics,

- marketing,

- and customer service.

All of these teams have a hand in customer experience.

To ensure that they are working toward a common goal, they must

- communicate in a timely manner,

- meet and discuss upcoming initiatives and projects,

- and discuss results along with future objectives.

In a perfect world, every team has the time and passion to accomplish these tasks to ensure the customer experience is in sync with their work. In reality, teams end up scrambling for information and for an understanding of how each business function is impacting the customer experience, sometimes after the experience has already launched.

This process is extremely inefficient and can lead to serious problems across the customer experience, which in turn can lead to irreparable financial losses. If business functions are not on the same page when launching an experience, the result is a broken one for customers. Siloed teams create siloed experiences.

There are plenty of companies that operate in a semi-siloed manner and feel it is successful. What these companies don’t understand is that customer experience issues often occur in the gaps between these silos, in what some refer to as the “customer experience abyss,” where no business function claims ownership. Customers react to these broken experiences by voicing their frustration through different communication channels (chats, surveys, reviews, calls, tweets, posts, etc.).

For example, if a company launches a new subscription service and customers are confused about the pricing model, is it the job of customer service to explain it to customers? What about those customers that don’t contact the business at all? Does marketing need to modify their campaigns? Maybe digital needs to edit the nomenclature online… It could be all of these things. The key is determining which will solve the poor customer experience.

The objective of a CX program is to focus deeply on what customers are saying and to turn business teams into advocates for what customers say. Once advocacy is achieved, the customer experience can be improved at scale with speed and precision. A premium customer experience is the key to company growth and customer retention. How important is the customer experience?

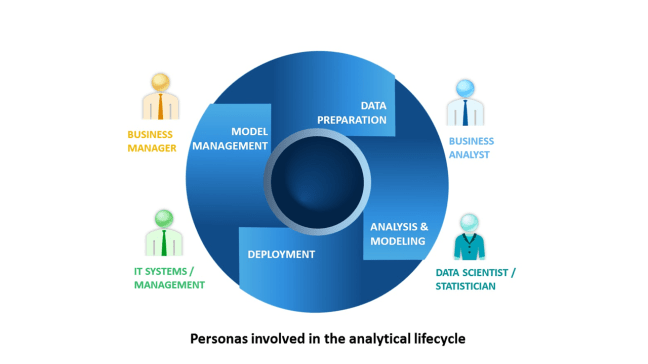

You may be saying to yourself, “We already have teams examining our customer data, no need to establish a new team to look at it.” While this may be true, the teams are likely taking a siloed approach to analyzing customer data by only investigating the portion of the data they own.

For example, the social team looks at social data, the digital team analyzes web feedback and analytics, the marketing team reviews surveys and performs studies, etc. Seldom do these teams come together and combine their data to get a holistic view of the customer. As a result, when they prioritize CX improvements, they do so based on an incomplete view of the customer.

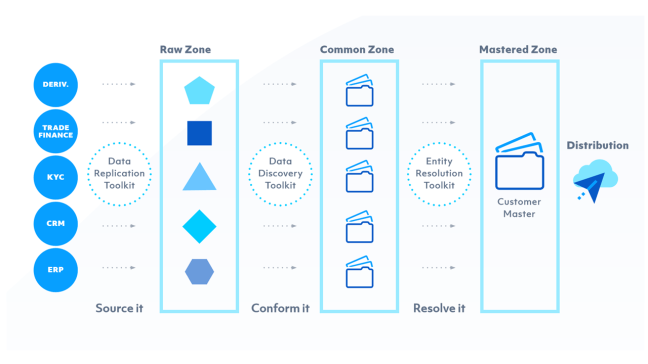

Consolidating all customer data gives a unified view of your customers while lessening the workload and increasing the rate at which insights are generated. The experiences customers have with marketing, digital, and customer service all lead to different interactions. Breaking these interactions into separate components is the reason companies struggle to understand the true customer experience and miss the big picture on how to improve it.
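As a rough illustration of that consolidation, the sketch below merges records from several channels into one per-customer view. It is a minimal Python sketch under stated assumptions, not a description of any particular product: the `Interaction` record, the `unify` and `by_customer` helpers, the channel names, and the sample data are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical unified record; the field names are assumptions for illustration.
@dataclass(frozen=True)
class Interaction:
    customer_id: str
    channel: str
    text: str


def unify(sources):
    """Flatten per-channel record lists into one list of Interaction objects.

    `sources` maps a channel name to a list of (customer_id, text) pairs.
    """
    unified = []
    for channel, records in sources.items():
        for customer_id, text in records:
            unified.append(Interaction(customer_id, channel, text))
    return unified


def by_customer(interactions):
    """Group unified interactions so each customer's channels sit side by side."""
    grouped = defaultdict(list)
    for item in interactions:
        grouped[item.customer_id].append(item)
    return dict(grouped)


# Made-up sample data standing in for the exports each team owns today.
sources = {
    "social": [("c1", "Pricing for the new subscription is confusing.")],
    "web_feedback": [("c2", "Could not find the plan comparison page.")],
    "survey": [("c1", "Support was helpful, but billing was unclear.")],
}

view = by_customer(unify(sources))
channels_for_c1 = sorted(item.channel for item in view["c1"])
```

In this toy data, customer `c1` appears in both the social and survey exports, which is the kind of connection a team looking only at its own channel would not see.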

The CX team, once established, will be responsible for creating a unified view of the customer which will provide the company with an unbiased understanding of how customers feel about their experiences as well as their expectations of the industry. These insights will provide awareness, knowledge, and curiosity that will empower business functions to improve the end-to-end customer experience.

CX programs are disruptive. A successful CX program will uncover some insights that align with current business objectives and some that don’t align at all. So, what do you do when you run into that stone wall? How do you move forward when a business function refuses to adopt the voice of the customer? Call in backup from an executive who understands the value of the voice of the customer and why it needs to be top of mind for every function.

When creating a disruptive program like CX, an executive owner is needed to overcome business hurdles along the way. Ideally, this executive owner will support the program and promote it to the broader business functions. In order to scale and become more widely adopted, it is also helpful to have executive support when the program begins.

The best candidates for initial ownership are typically marketing, analytics, or operations executives. Along with understanding the value a CX program can offer, they should also understand the business’s current data landscape and help provide access to these data sets. Once the CX team has access to all the available customer data, it will be able to aggregate all necessary interactions.

Executive sponsors help dramatically with CX program adoption and eventual scaling. They can

- provide the funding to secure the initial success,

- promote the program so that other business functions work more closely with it,

- and remove roadblocks that may otherwise take weeks to get over.

Although an executive sponsor is not strictly necessary, one can make your life far easier while you build, launch, and execute your CX program. Your customers don’t always tell you what you want to hear, and that can be difficult for some business functions to handle. When this is the case, some business functions will try to discredit insights altogether if the insights don’t align with their goals.

Data grows exponentially every year, faster than any company can manage. In 2016, 90% of the world’s data had been created in the previous two years, and 80% of that data was unstructured language. The hype of “Big Data” has passed and the focus is now on “Big Insights”: how to manage all the data and make it useful. Companies should not be allocating resources to collecting more data through expensive surveys or market research. Instead, they should focus on doing a better job of listening and reacting to what customers are already saying, by unifying the voice of the customer with data that is already readily available.

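It’s critical to identify all the available customer interactions and determine the value and richness of each. Be sure to think about all forms of direct and indirect interactions customers have. This includes direct channels such as calls, chats, emails, feedback, and surveys, and indirect channels such as social, reviews, blogs, comment boards, and media.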

These channels are just a handful of the most popular avenues customers use to engage with brands. Your company may have more, fewer, or none of these. Regardless, the focus should be on aggregating as many as possible to create a holistic view of the customer. This does not mean only aggregating your phone calls and chats; it means including every channel where your customers talk with, at, or about your company. You can’t be selective when it comes to analyzing your customers by channel. All customers are important, and they may have different ways of communicating with you.

Imagine if someone only listened to their significant other in the two rooms where they spend the most time, say the family room and kitchen. They would probably have a good understanding of the overall conversations (similar to a company only reviewing calls, chats, and social). However, ignoring them in the dining room, bedroom, kids’ rooms, and backyard would inevitably lead to serious communication problems.

It’s true that phone, chat, and social data are extremely rich, accessible, and popular, but that doesn’t mean you should ignore customers who use other channels. Every channel is important. Each is used by a different customer, in a different manner, and serves a different purpose, with some providing more context than others.

You may find that your most important customers aren’t always the loudest and may be interacting with you through an obscure channel you never thought about. You need every customer channel to fully understand your customers’ experience.