Uncertainty is inherent to most data and can enter the analysis pipeline during the measurement, modeling, and forecasting phases. Effectively communicating uncertainty is necessary for establishing scientific transparency. Further, people commonly assume that there is uncertainty in data analysis, and they need to know the nature of the uncertainty to make informed decisions.

However, understanding even the most conventional communications of uncertainty is highly challenging for novices and experts alike, due in part to the abstract nature of probability and to ineffective communication techniques. Reasoning with uncertainty is universally difficult, but researchers are revealing how some types of visualizations can improve decision-making in a variety of contexts, from hazard forecasting, to healthcare communication, to everyday decisions about transit.

Scholars have distinguished different types of uncertainty, including

- aleatoric (irreducible randomness inherent in a process),

- epistemic (uncertainty from a lack of knowledge that could theoretically be reduced given more information),

- and ontological uncertainty (uncertainty about how accurately the model describes reality, which can only be described subjectively).

The term risk is also used in some decision-making fields to refer to quantified forms of aleatoric and epistemic uncertainty, whereas uncertainty is reserved for potential error or bias that remains unquantified. Here we use the term uncertainty to refer to quantified uncertainty that can be visualized, most commonly a probability distribution.

This article begins with a brief overview of the common uncertainty visualization techniques and then elaborates on the cognitive theories that describe how the approaches influence judgments. The goal is to provide readers with the necessary theoretical infrastructure to critically evaluate the various visualization techniques in the context of their own audience and design constraints. Importantly, there is no one-size-fits-all uncertainty visualization approach guaranteed to improve decisions in all domains, nor is there any guarantee that presenting uncertainty to readers will improve judgments or trust. Therefore, visualization designers must think carefully about each of their design choices or risk adding more confusion to an already difficult decision process.

Uncertainty Visualization Design Space

There are two broad categories of uncertainty visualization techniques. The first comprises graphical annotations that show properties of a distribution, such as the mean, confidence/credible intervals, and distributional moments.
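As a rough illustration of the quantities such annotations display, the sketch below computes a sample mean and an approximate 95% interval of the kind an error bar might show. The function name `mean_with_interval` and the sample values are hypothetical, and the interval assumes a normal approximation to the sampling distribution, which is only one of several ways to construct an interval.

```python
from statistics import NormalDist, mean, stdev

def mean_with_interval(sample, level=0.95):
    """Return (mean, lower, upper) using a normal approximation (an assumption)."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    m = mean(sample)
    se = stdev(sample) / n ** 0.5              # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return m, m - z * se, m + z * se

# Hypothetical measurements, used only for illustration
m, lower, upper = mean_with_interval([9.0, 11.0, 10.0, 12.0, 8.0])
```

The three returned numbers are what a point-plus-error-bar annotation would encode; the shape of the distribution is not part of this summary.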

Numerous visualization techniques use the composition of marks (i.e., geometric primitives, such as dots, lines, and icons) to display uncertainty directly, as in error bars depicting confidence or credible intervals. Other approaches use marks to display uncertainty implicitly as an inherent property of the visualization. For example, hypothetical outcome plots (HOPs) are random draws from a distribution that are presented in an animated sequence, allowing viewers to form an intuitive impression of the uncertainty as they watch.
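The data behind a hypothetical outcome plot can be sketched as one random draw per animation frame. The example below is a minimal sketch that assumes two normally distributed outcomes, A and B; the function name `hop_frames`, the means, and the standard deviation are all hypothetical, and the rendering of each frame is left out.

```python
import random

def hop_frames(mean_a, mean_b, sd, n_frames, seed=0):
    """Return one (a, b) draw per animation frame for two hypothetical outcomes."""
    rng = random.Random(seed)  # seeded so the sequence of frames is reproducible
    return [(rng.gauss(mean_a, sd), rng.gauss(mean_b, sd)) for _ in range(n_frames)]

frames = hop_frames(mean_a=10.0, mean_b=12.0, sd=3.0, n_frames=200)

# Share of frames in which B exceeds A: the kind of impression a viewer
# might build up while watching the draws play in sequence.
share_b_higher = sum(b > a for a, b in frames) / len(frames)
```

Each tuple in `frames` would be drawn as a single frame, so the uncertainty is never plotted as a separate mark; it shows up as variation from frame to frame.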

The second category of techniques focuses on mapping probability or confidence to a visual encoding channel. Visual encoding channels define the appearance of marks using controls such as color, position, and transparency. Techniques that use encoding channels have the added benefit of adjusting a mark that is already in use, such as making a mark more transparent if the uncertainty is high. Marks and encodings that both communicate uncertainty can be combined to create hybrid approaches, such as in contour box plots and probability density and interval plots.
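A minimal sketch of mapping uncertainty to the transparency channel is shown below. It assumes uncertainty is summarized as a standard deviation and that a linear mapping is acceptable; the function name `alpha_from_uncertainty` and the floor `alpha_min` are hypothetical choices, not a recommendation from the literature.

```python
def alpha_from_uncertainty(sd, sd_max, alpha_min=0.15):
    """Map a standard deviation to opacity: higher uncertainty, more transparent."""
    if sd_max <= 0:
        raise ValueError("sd_max must be positive")
    frac = min(max(sd / sd_max, 0.0), 1.0)  # clamp the relative uncertainty to [0, 1]
    return 1.0 - frac * (1.0 - alpha_min)   # 1.0 when certain, alpha_min at sd_max

# Opacity for three marks with low, medium, and high uncertainty
alphas = [alpha_from_uncertainty(sd, sd_max=4.0) for sd in (0.0, 2.0, 4.0)]
```

The floor keeps the most uncertain marks faintly visible rather than removing them entirely; whether that is desirable depends on the task and audience.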

More expressive visualizations provide a fuller picture of the data by depicting more properties, such as the nature of the distribution and outliers, which can be lost with intervals. Other work proposes that showing distributional information in a frequency format (e.g., 1 out of 10 rather than 10%) more naturally matches how people think about uncertainty and can improve performance.

Visualizations that represent frequencies tend to be highly effective communication tools, particularly for individuals with low numeracy (i.e., difficulty working with numbers), and can help people overcome various decision-making biases.
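The frequency framing can be sketched in two small steps: restating a probability as "k out of n", and reducing a distribution to a handful of equally likely values that could each be drawn as one dot, in the spirit of the quantile dotplot mentioned later in this article. The function names and the normal distribution with mean 12 and standard deviation 3 are assumptions made purely for illustration.

```python
from fractions import Fraction
from statistics import NormalDist

def as_frequency(p, max_denominator=20):
    """Express a probability as 'k out of n' with a small denominator."""
    frac = Fraction(p).limit_denominator(max_denominator)
    return f"{frac.numerator} out of {frac.denominator}"

def quantile_dots(dist, n_dots=20):
    """Return n_dots equally likely values (midpoint quantiles) of dist."""
    return [dist.inv_cdf((i + 0.5) / n_dots) for i in range(n_dots)]

label = as_frequency(0.10)  # "1 out of 10"

# Hypothetical forecast distribution, one dot per equally likely value
dots = quantile_dots(NormalDist(mu=12.0, sigma=3.0), n_dots=20)
dots_above_15 = sum(d > 15.0 for d in dots)  # 3 of the 20 dots
```

A reader can then answer a question such as "how likely is a value above 15?" by counting dots (3 out of 20) instead of reasoning about an area under a curve.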

Researchers have dedicated a significant amount of work to examining which visual encodings are most appropriate for communicating uncertainty, notably in geographic information systems and cartography. One goal of these approaches is to evoke a sensation of uncertainty, for example, using fuzziness, fogginess, or blur.

Other work that examines uncertainty encodings also seeks to make looking up values more difficult when the uncertainty is high, such as value-suppressing color palettes.
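One way to picture value suppression is as a binning rule in which fewer value categories remain distinguishable as uncertainty grows. The sketch below is loosely inspired by that idea and is not the published palette construction: the function name `suppressed_bin`, the halving scheme, and the assumption that both value and uncertainty are normalized to [0, 1] are all hypothetical.

```python
def suppressed_bin(value, uncertainty, levels=4):
    """Return (uncertainty_level, value_bin, n_bins) for inputs in [0, 1]."""
    if not (0.0 <= value <= 1.0 and 0.0 <= uncertainty <= 1.0):
        raise ValueError("value and uncertainty must lie in [0, 1]")
    u_level = min(int(uncertainty * levels), levels - 1)  # 0 = most certain
    n_bins = 2 ** (levels - 1 - u_level)                  # 8, 4, 2, 1 when levels=4
    v_bin = min(int(value * n_bins), n_bins - 1)          # coarser as n_bins shrinks
    return u_level, v_bin, n_bins

certain = suppressed_bin(0.7, 0.1)    # (0, 5, 8): one of eight value bins
uncertain = suppressed_bin(0.7, 0.9)  # (3, 0, 1): all values share one bin
```

Each `(uncertainty_level, value_bin)` pair would then be assigned a color, so that highly uncertain cells collapse to a single color and precise values cannot be read from them.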

Given that there is no one-size-fits-all technique, in the following sections, we detail the emerging cognitive theories that describe how and why each visualization technique functions.

Uncertainty Visualization Theories

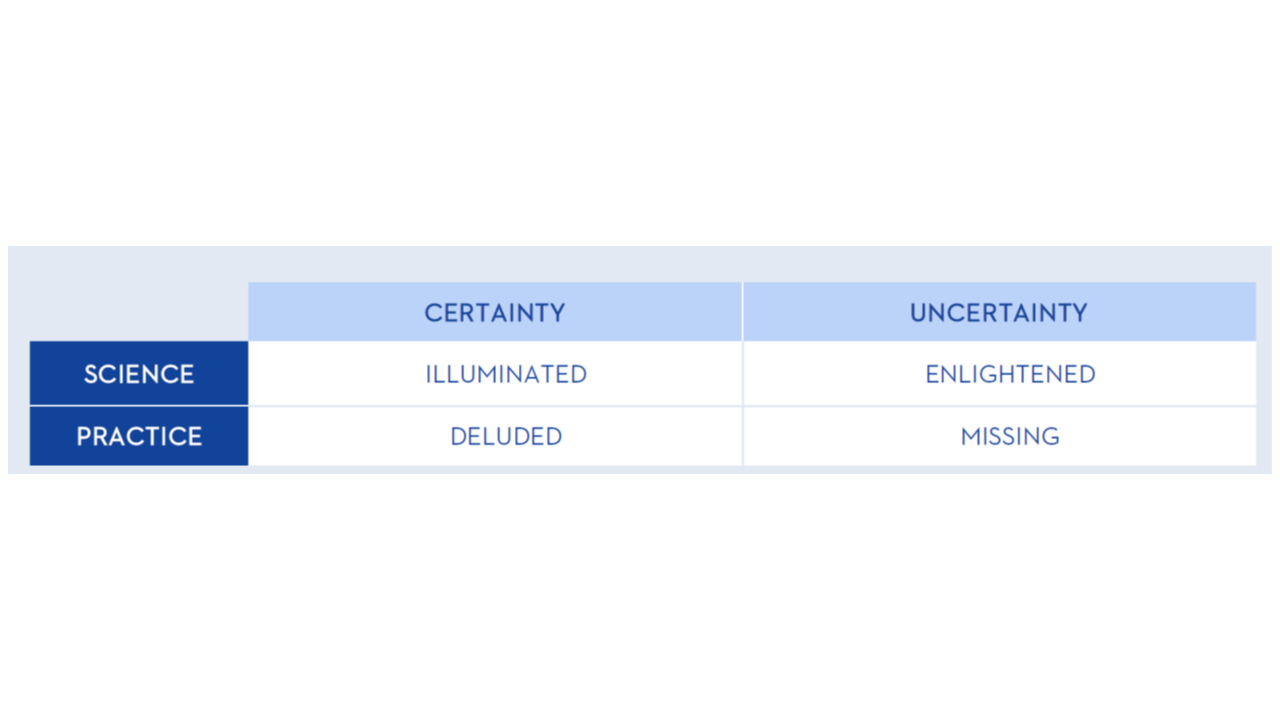

The empirical evaluation of uncertainty visualizations is challenging. Many user experience goals (e.g., memorability, engagement, and enjoyment) and performance metrics (e.g., speed, accuracy, and cognitive load) can be considered when evaluating uncertainty visualizations. Beyond identifying the metrics of evaluation, even the simplest tasks have countless configurations. As a result, it is hard for any single study to test the effects of a visualization thoroughly enough to ensure that it is appropriate in all cases. Visualization guidelines based on a single study or a small set of studies are potentially incomplete. Theories can help bridge the gap between visualization studies by identifying and synthesizing converging evidence, with the goal of helping scientists make predictions about how a visualization will be used. Understanding foundational theoretical frameworks will empower designers to think critically about the design constraints in their work and generate optimal solutions for their unique applications. The theories detailed in the next sections are only those that have mounting support from numerous evidence-based studies in various contexts. As an overview, the table provides a summary of the dominant theories in uncertainty visualization, along with proposed visualization techniques.

General Discussion

There are no one-size-fits-all uncertainty visualization approaches, which is why visualization designers must think carefully about each of their design choices or risk adding more confusion to an already difficult decision process. This article overviews many of the common uncertainty visualization techniques and the cognitive theories that describe how and why they function, to help designers think critically about their design choices. We focused on the uncertainty visualization methods and cognitive theories that have received the most support from converging measures (i.e., the practice of testing hypotheses in multiple ways), but there are many approaches not covered in this article that will likely prove to be exceptional visualization techniques in the future.

There is no single visualization technique we endorse, but there are some that should be critically considered before employing them. Intervals, such as error bars and the Cone of Uncertainty, can be particularly challenging for viewers. If a designer needs to show an interval, we also recommend displaying information that is more representative, such as a scatterplot, violin plot, gradient plot, ensemble plot, quantile dotplot, or HOP. Just showing an interval alone could lead people to conceptualize the data as categorical. As alluded to in the prior paragraph, combining various uncertainty visualization approaches may be a way to overcome issues with one technique or get the best of both worlds. For example, each animated draw in a hypothetical outcome plot could leave a trace that slowly builds into a static display such as a gradient plot, or animated draws could be used to help explain the creation of a static technique such as a density plot, error bar, or quantile dotplot. Media outlets such as the New York Times have presented animated dots in a simulation to show inequalities in wealth distribution due to race. More research is needed to understand if and how various uncertainty visualization techniques function together. It is possible that combining techniques is useful in some cases, but new and undocumented issues may arise when approaches are combined.

In closing, we stress the importance of empirically testing each uncertainty visualization approach. As noted in numerous papers, the way that people reason with uncertainty is non-intuitive, which can be exacerbated when uncertainty information is communicated visually. Evaluating uncertainty visualizations can also be challenging, but it is necessary to ensure that people correctly interpret a display. A recent survey of uncertainty visualization evaluations offers practical guidance on how to test uncertainty visualization techniques.

Click here to access the entire article in the Handbook of Computational Statistics and Data Science