Companies are facing not only increasing cyber threats but also new laws and regulations for managing and reporting on data security and cybersecurity risks.

Boards of directors face an enormous challenge: to oversee how their companies manage cybersecurity risk. As boards tackle this oversight challenge, they have a valuable resource in Certified Public Accountants (CPAs) and in the public company auditing profession.

CPAs bring to bear core values, including independence, objectivity, and skepticism, as well as deep expertise in providing independent assurance services, both for the financial statement audit and for a variety of other subject matters. CPA firms have played a role in assisting companies with information security for decades. In fact, four of the leading 13 information security and cybersecurity consultants are public accounting firms.

This tool provides questions that board members charged with cybersecurity risk oversight can use as they discuss cybersecurity risks and disclosures with management and CPA firms.

The questions are grouped under four key areas:

- Understanding how the financial statement auditor considers cybersecurity risk

- Understanding the role of management and responsibilities of the financial statement auditor related to cybersecurity disclosures

- Understanding management’s approach to cybersecurity risk management

- Understanding how CPA firms can assist boards of directors in their oversight of cybersecurity risk management

This publication is not meant to be an all-inclusive list of questions or a checklist; rather, it provides examples of the types of questions board members may ask of management and the financial statement auditor. The dialogue that these questions spark can help clarify the financial statement auditor’s responsibility for cybersecurity risk considerations in the context of the financial statement audit and, if applicable, the audit of internal control over financial reporting (ICFR). It can also help board members develop their understanding of how the company is managing its cybersecurity risks.

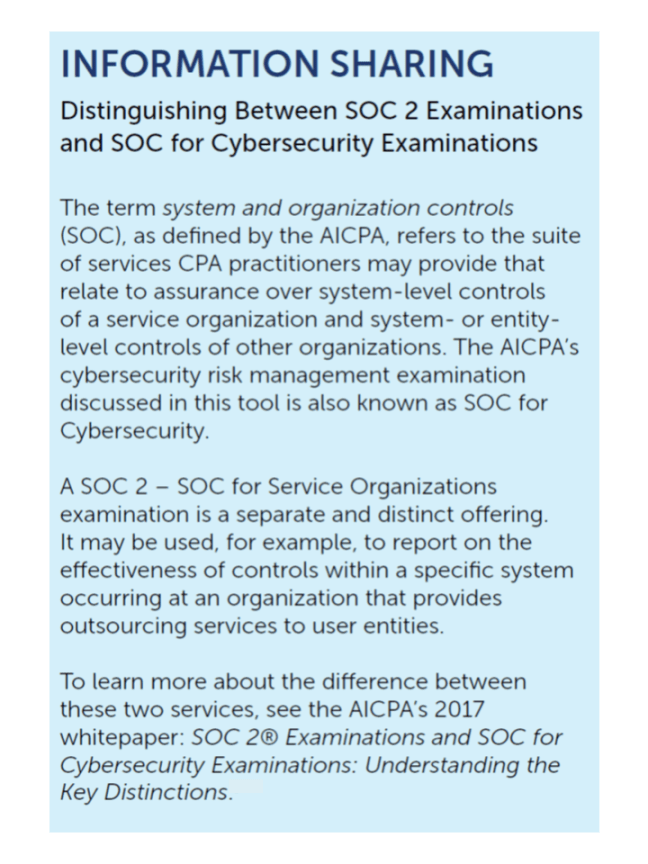

Additionally, this tool may help board members charged with cybersecurity risk oversight learn more about additional offerings from CPA firms. One example is the cybersecurity risk management reporting framework developed by the American Institute of CPAs (AICPA). The framework enables CPAs to examine and report on management-prepared cybersecurity information, thereby boosting the confidence that stakeholders place in a company’s initiatives.

With this voluntary, market-driven framework, companies can also communicate pertinent information regarding their cybersecurity risk management efforts and educate stakeholders about the systems, processes, and controls that are in place to detect, prevent, and respond to breaches.
